
Subject: [ublas] improving the benchmarking API

From: Stefan Seefeld (stefan_at_[hidden])

Date: 2018-09-12 02:07:55

Hi there,

I have been looking at the existing benchmarks, to see how to extend

them to cover more functions as well as alternative implementations. The

existing benchmarks have a few shortcomings that I would like to address:

* a single benchmark executable will measure a range of operations, and

write output to stdout. It's impossible to benchmark individual operations.

* operations are measured with a single set of inputs. It would be very

helpful to be able to run operations on a range of inputs, to see how

they perform over a variety of problem sizes.

* the generated output should be easily machine-readable, so it can be

post-processed into benchmark reports (including performance charts).

The above will be particularly useful as we are preparing PRs to

include support for OpenCL backends (work that has been done by Fady

Essam as a GSoC project).
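
To make the three points in the list above a bit more concrete, here is a minimal sketch of the shape I have in mind: one operation per run, timed over a range of problem sizes, with one easily parsed `size seconds` pair per line. It is written in Python purely for illustration and is not the code in the PR; the names `naive_mm_prod`, `benchmark` and `format_results`, as well as the output format, are assumptions made up for this example.

```python
import time


def naive_mm_prod(a, b):
    """Plain triple-loop matrix product on lists of lists (illustrative stand-in)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [sum(a[i][p] * b[p][j] for p in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def benchmark(op, sizes, make_value=float):
    """Time `op` once on square matrices of each size; return (size, seconds) pairs."""
    results = []
    for n in sizes:
        a = [[make_value(i + j) for j in range(n)] for i in range(n)]
        b = [[make_value(i - j) for j in range(n)] for i in range(n)]
        start = time.perf_counter()
        op(a, b)
        results.append((n, time.perf_counter() - start))
    return results


def format_results(results):
    """Hypothetical output format: one 'size seconds' pair per line."""
    return "\n".join(f"{n} {t:.9f}" for n, t in results)


if __name__ == "__main__":
    # A single operation, measured over a range of problem sizes.
    print(format_results(benchmark(naive_mm_prod, [2, 4, 8, 16, 32])))
```

Passing `make_value=complex` instead of the default `float` would exercise a different value-type with the same driver, which is the kind of variation the new benchmarks are meant to cover.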

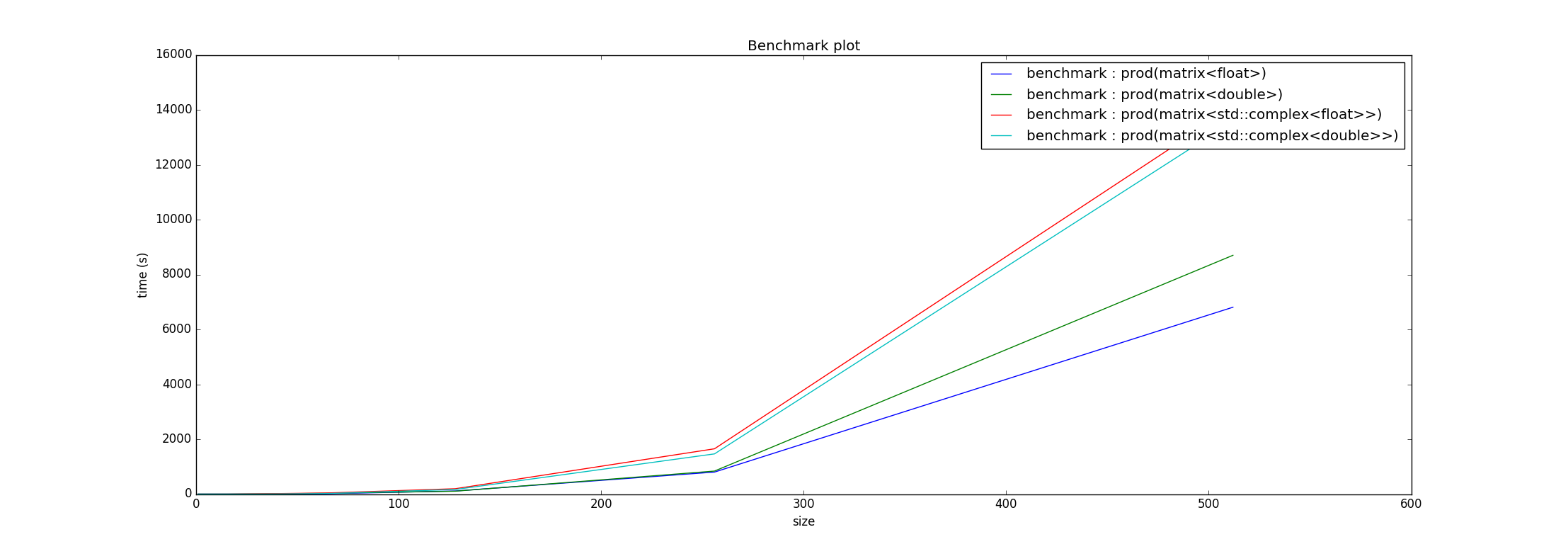

I have attempted to prototype a few new benchmarks (matrix-matrix

products, as well as matrix-vector products, for a variety of

value-types), together with a simple script to produce graphs. For

example, the attached plot was produced by running:

```

.../mm_prod -t float > mm_prod_float.txt

.../mm_prod -t double > mm_prod_double.txt

.../mm_prod -t fcomplex > mm_prod_fcomplex.txt

.../mm_prod -t dcomplex > mm_prod_dcomplex.txt

plot.py mm_prod_*.txt

```
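
For readers who want a rough idea of the post-processing step, the following is a hedged sketch of what a script in the spirit of `plot.py` could look like. It is not the script from the PR. It assumes each result file holds whitespace-separated `size seconds` pairs, one per line, which may well differ from the real output format, and it relies on the third-party matplotlib package for the chart. The function names `parse_results` and `plot_files` are hypothetical.

```python
import sys


def parse_results(text):
    """Parse 'size seconds' lines (assumed format); skip blank lines and '#' comments."""
    sizes, times = [], []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        size, seconds = line.split()[:2]
        sizes.append(int(size))
        times.append(float(seconds))
    return sizes, times


def plot_files(paths):
    """Draw one curve per result file on shared log-log axes."""
    import matplotlib.pyplot as plt  # third-party; imported lazily so parsing works without it

    for path in paths:
        with open(path) as f:
            sizes, times = parse_results(f.read())
        plt.plot(sizes, times, marker="o", label=path)
    plt.xlabel("matrix size")
    plt.ylabel("time (s)")
    plt.xscale("log")
    plt.yscale("log")
    plt.legend()
    plt.show()


if __name__ == "__main__":
    plot_files(sys.argv[1:])
```

Keeping the parsing separate from the plotting means the same result files can feed other reports later without touching the benchmark executables.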

I'd appreciate any feedback, both on the general concepts and on

the code, which is here: https://github.com/boostorg/ublas/pull/57

Thanks,

Stefan

--

...ich hab' noch einen Koffer in Berlin...